I’ve seen a lot of sentiment around Lemmy that AI is “useless”. I think this tends to stem from the fact that AI has not delivered on, well, anything the capitalists that push it have promised it would. That is to say, it has failed to meaningfully replace workers with a less expensive solution - AI that actually attempts to replace people’s jobs is incredibly expensive (and environmentally irresponsible), and the companies behind it simply lie and say it’s not. It’s subsidized by that sweet sweet VC money so they can keep the lie up. And I say attempt because AI is truly horrible at actually replacing people. It’s going to make mistakes, and while everybody’s been trying real hard to make it less wrong, it’s just never gonna be “smart” enough to not have a human reviewing its behavior. Then you’ve got AI being shoehorned into every little thing that really, REALLY doesn’t need it. I’d say that kind of AI is useless.

But AIs have been very useful to me. For one thing, they’re much better at googling than I am. They save me time by summarizing articles to just give me the broad strokes, and I can decide whether I want to go into the details from there. They’re also good idea generators - I’ve used them in creative writing just to explore things like “how might this story go?” or “what are interesting ways to describe this?”. I never really use what comes out of them verbatim - whether image or text - but it’s a good way to explore, and seeing things expressed in ways you never would’ve thought of (especially next to the very obvious expressions) tends to push your mind in new directions.

Lastly, I don’t know if it’s just because there’s an abundance of Japanese language learning content online, but GPT-4o has been incredibly useful in learning Japanese. I can ask it things like “how would a native speaker express X?” and it gives me good answers that even my Japanese teacher agreed with. It can also give some incredibly accurate breakdowns of grammar. I’ve tried with less popular languages like Filipino and it just isn’t the same, but as far as Japanese goes it’s like having a tutor on standby 24/7. In fact, that’s exactly how I’ve been using it - I have it grade my own translations and give feedback on what could’ve been said more naturally.

All this to say, AI when used as a tool, rather than a dystopic stand-in for a human, can be a very useful one. So, what are some use cases you guys have where AI actually is pretty useful?

I think it’s useful for spurring my own creativity in writing because I have a hard time getting started. To be fair to me, I pretty much tear the whole thing down and start over, but it gives me ideas.

> For one thing, they’re much better at googling than I am.

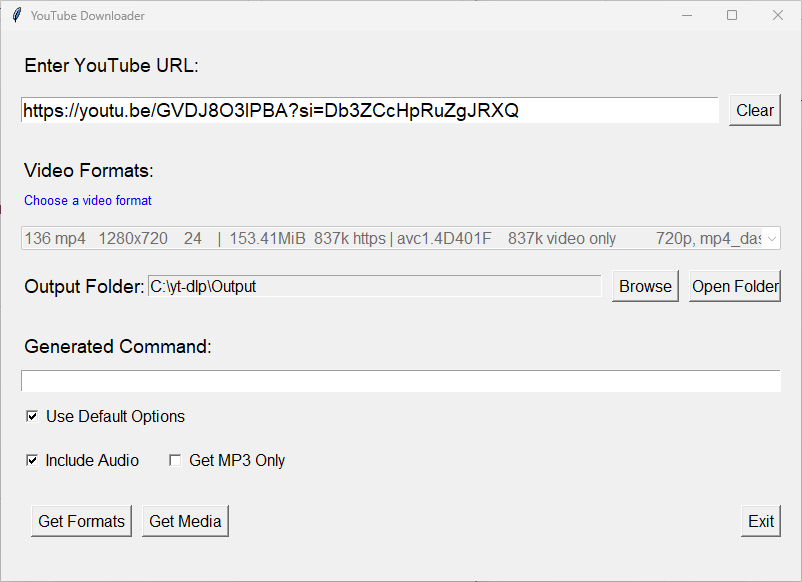

I used it to write a GUI frontend for yt-dlp in python so I can rip MP3s from YouTube videos in two clicks to listen to them on my phone while I’m running with no signal, instead of hand-crafting and running yt-dlp commands in CMD.

Also does HD video rips with audio encoding, if I want.

It took us about a day to make a fully polished product over 9 iterative versions.

It would have taken me a couple weeks to write it myself (and therefore I would not have done so, as I am supremely lazy)
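For anyone curious, the core of a frontend like this is mostly about assembling the right yt-dlp command. Below is a minimal sketch of that part, not the commenter's actual code: the function names `build_mp3_command` and `rip_mp3` and the output folder layout are made up for illustration, and it assumes `yt-dlp` and `ffmpeg` are installed and on the PATH. A GUI button would just call `rip_mp3` with the pasted URL.

```python
from __future__ import annotations

import subprocess


def build_mp3_command(url: str, out_dir: str = ".") -> list[str]:
    """Build the yt-dlp argument list for an audio-only MP3 rip.

    Hypothetical helper for illustration; only the yt-dlp flags are real.
    """
    return [
        "yt-dlp",
        "--extract-audio",            # keep only the audio track
        "--audio-format", "mp3",      # convert to MP3 (needs ffmpeg)
        "--output", f"{out_dir}/%(title)s.%(ext)s",  # name files by video title
        url,
    ]


def rip_mp3(url: str, out_dir: str = ".") -> None:
    """Run yt-dlp; raises CalledProcessError if the download fails."""
    subprocess.run(build_mp3_command(url, out_dir), check=True)
```

Passing the arguments as a list (rather than one shell string) means a URL with odd characters can't be misread by the shell, which matters once the URL comes from a text box.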

I use it for little Python projects where it’s really really useful.

I’ve used it for linux problems where it gave me the solution to problems that I had not been able to solve with a Google search alone.

I use it as a kickstarter for writing texts by telling it roughly what my text needs to be, then tweaking the result it gives me. Sometimes I just use the first sentence but it’s enough to give me a starting point to make life easier.

I use it when I need to understand texts about a topic I’m not familiar with. It can usually give me an idea of what the terminology means and how things are connected, which helps a lot for further research on the topic and ultimately understanding the text.

I use it for everyday problems, like when I needed a new tube for my bike but wasn’t sure what size it was. While I was in the shop, I told it what was written on the tyre, showed it a picture of the tube packaging, and asked whether it was the right one. It could tell me that it was the correct one and why. The explanation was easy to fact-check.

I use Photoshop AI a lot to remove unwanted parts in photos I took or to expand photos where I’m not happy with the crop.

Honestly, I absolutely love the new AI tools and I think people here are way too negative about it in general.

I take pictures of my recipe books and ask ChatGPT to scan and convert them to the schema.org recipe format so I can import them into my Nextcloud cookbook.

Woah cool! Can you share your prompt for that? I’d like to try it.

I don’t do anything too sophisticated, just something like:

Scan this image of a recipe and format it as JSON that conforms to the schema defined at https://schema.org/Recipe.

Sometimes it puts placeholders in that aren’t valid JSON, so I don’t have it fully automated… But it’s good enough for my needs.
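If anyone wants to catch the invalid-JSON cases before importing, a small check along these lines might help. This is only a sketch under assumptions: the function name `check_recipe` is made up, and the list of "required" keys is my own guess at a sensible minimum (schema.org itself doesn't mandate any of them, and the Nextcloud cookbook app may expect something different).

```python
from __future__ import annotations

import json

# Assumed minimum for a usable recipe; these are real schema.org Recipe
# properties, but treating them as required is an assumption.
REQUIRED_KEYS = ("name", "recipeIngredient", "recipeInstructions")


def check_recipe(text: str) -> list[str]:
    """Return a list of problems; an empty list means it looks importable."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError as err:
        # Placeholders like `...` typically end up here.
        return [f"invalid JSON: {err.msg} at line {err.lineno}"]

    if not isinstance(data, dict):
        return ["top-level value is not a JSON object"]

    problems = []
    if data.get("@type") != "Recipe":
        problems.append('"@type" is not "Recipe"')
    for key in REQUIRED_KEYS:
        if not data.get(key):  # missing, empty string, or empty list
            problems.append(f"missing or empty: {key}")
    return problems
```

Anything that comes back with a non-empty list could be sent back to the model with the problems pasted in, or just fixed by hand.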

I’ve thought that the various Nextcloud cookbook apps should do this for sites that don’t have the recipe object… But I don’t feel motivated to implement this myself.

This thread has convinced me that LLMs are merely a mild increment in productivity.

The most compelling is that they’re good at boilerplate code. IDEs have been improving on that since forever. Although there’s a lot of claims in this thread that seem unlikely - gains way beyond even what marketing is claiming.

I work in an email / spreadsheet / report type job. We’ve always been agile with emerging techs, but LLMs just haven’t made a dent.

This might seem offensive, but clients don’t pay me to write emails that LLMs could, because anything an LLM could write could be found in a web search. The emails I write are specific to a client’s circumstances. There are very few “boilerplate” sentences.

Yes LLMs can be good at updating reports, but we have highly specialised software for generating reports from very carefully considered templates.

I’ve heard they can be helpful in a “convert this to csv” kind of way, but that’s just not a problem I ever encounter. Maybe I’m just used to using spreadsheets to manipulate data so never think to use an LLM.

I’ve seen low level employees try to use LLMs to help with their emails. It’s usually obvious because the emails they write include a lot of extra sentences and often don’t directly address the query.

I don’t intend this to be offensive, and I suspect that my attitude really just identifies me as a grumpy old man, but I can’t really shake the feeling that in email / spreadsheet / report type jobs anyone who can make use of an LLM wasn’t or isn’t producing much value anyway. This thread has really reinforced that attitude.

It reminds me a lot of blockchain tech. 10 years ago it was going to revolutionise data everything. Now there’s some niche use cases… “it could be great at recording vehicle transfers if only centralised records had some disadvantages”.

r/SubSimGPT2Interactive for the lulz is my #1 use case

I do occasionally ask Copilot programming questions and it gives reasonable answers most of the time.

I use code autocomplete tools in VSCode but often end up turning them off.

Controversial, but Replika actually helped me out during the pandemic when I was in a rough spot. I trained a copyright-safe (theft-free) bot on my own conversations from back then and have been chatting with the me side of that conversation for a little while now. It’s like getting to know a long-lost twin brother, which is nice.

Otherwise, I’ve used small LLMs and classifiers for a wide range of tasks, like sentiment analysis, toxic content detection for moderation bots, AI media detection, summarization… I like using these better than just throwing everything at a huge model like GPT-4o because they’re more focused and less computationally costly (hence also better for the environment). I’m working on training some small copyright-safe base models to do certain sequence prediction tasks that come up in the course of my data science work, but they’re still a bit too computationally expensive for my clients.

I run some TTRPG groups and having AI take in some context and generate the first draft of flavor text for custom encounters is nice. Generating background art and player character portraits is also an easy win for me.

This is my current best use for it as well. Having a unique portrait for every named NPC helps them stand out quite a bit better and the players respond much more strongly to all of them.

I use it for generating illustrations and NPCs for my TTRPG campaign, at which it excels. I’m not going to pay out the nose for an image that will be referenced for an hour or two.

I also use it for first drafts (resume, emails, stuff like that) as well as brainstorming and basic Google tier questions. Great jumping off point.

An iterative approach works best for me, refining results until they match what I’m looking for, then manually refining further until I’m happy with the results.

- to correct/rephrase a sentence or two if my sentence sounds too awkward

- if I’m having trouble making an Excel formula

- When troubleshooting, it’s nice to be able to ask Copilot about the issue in human language and have it actually understand my question (unlike a search engine) and pull from and reference relevant documentation in its answers. A couple of times, going back and forth with it has saved me several hours of searching for something that I had never even heard of.

It’s also great for rewriting things in a specific tone. I can give it a bland/terse/matter-of-fact paragraph and get back a more fun or professional or friendly version that would feel ridiculously cringe if I attempted to write it myself, but the AI makes it work somehow.

AI is a half cooked baked potato right now. Sure it will keep you fed if you can put up with all the hard lumps in there.

It’s really good for generating code snippets based on what I want to do (ex. “How do I play audio in a browser using JavaScript?”) and debugging small sections of code.

New question: does anyone NOT IN TECH have a use case for AI?

This whole thread is 90% programming, 9% other tech shit, and like 2 or 3 normal people uses

A lot of people on Lemmy work in tech so responses are going to lean heavily in that direction. I’m not in tech and if you check my answer to this you’ll have a number of examples. I also know a few people who wanted to learn a new language and asked ChatGPT for a day by day programme and some free sources and they were pretty happy with the results they got. I imagine you can do that with other subjects. Other people I know have used it to make images for things like club banners or newsletters.

Our DM, a dentist, so not in tech, used it to put together a D&D campaign, and so far it’s been fantastic.

Aside from coding assistants, the other use case I’ve come across recently is sentiment analysis of large datasets from free text survey responses. Just started exploring it so not sure how well it works yet, but the ridiculous amount of bias I see introduced in manual reviews is just awful. A machine can potentially be less inclined to try fitting summaries to the VP’s presupposed opinion than some lackey interns or self-serving consultancy.

Here’s mine, that works outside of tech:

It’s a great source for second opinions.

Say you want to make a CV, but you don’t know where to even begin. You could give it a description of what you’ve been doing and ask it to help you figure out what jobs fit the skillset and how to present your skills better.

It’s a good tool for such rough estimations that give you ground to improve upon.

This works well for planning or making up documentation. Saves a lot of time, with minimal impact to quality, because you’re not mindlessly copying or believing the output.

I’m also considering it for assisting me in learning Japanese. Just enough to be able to read in it. We’ll see how it does.

Timing traffic lights. They could look down the road and see when nothing is coming, to let the other direction go, like a traffic cop. It would save time and gas.

Or, hear me out, we could use roundabouts/traffic circles. No need for AI or any kind of sensor, just physical infrastructure to keep traffic flowing.

Absolutely, but there are a few problems with this. First, I live in the US. Americans do NOT know how to negotiate a roundabout. There is a roundabout near my house. The instructions for how to use it are posted on signs as you approach. They are wrong. They actually have inside lanes exiting across the outside lanes that can continue around. So not only is it wrong but it’s teaching the locals here what NOT to do at a normal roundabout.

Second, they don’t fit at existing intersections.

Third, I think they would be more expensive than just a piece of tech attached to traffic lights that already exist.

I mean the best solution would be some good public transportation, but I’m trying to be more realistic here. That’s for more civilized nations. In the US the car rules. And the bigger, the better.

> I live in the US. Americans do NOT know how to negotiate a roundabout.

As do I, but I think the main problem is that we don’t need to properly learn to use a roundabout, because the only times we have roundabouts are when they’re completely unnecessary/unhelpful. The three roundabouts I use most often are:

- right next to a stoplight, so they get jammed when there are a lot of cars waiting

- middle of a residential area, with stoplights/stop signs a block or two away preventing too much contention

- in a somewhat busy area where most of the traffic is going straight, so it functions like a speed bump

If we can figure out those continuous flow intersections, we can figure out roundabouts. We just need to actually use them.

> Second, they don’t fit at existing intersections.

They absolutely do, especially at the ones where they’d make the most impact (i.e. busy intersections with somewhat even traffic going all directions). You may actually save space because you don’t need special turn lanes. They are a little more tricky in smaller intersections, but those tend to have pretty light traffic anyway.

> I think they would be more expensive

Initial cost, sure, because the infrastructure is already there. But longer term, it should reduce costs because you don’t need to service all of those traffic signals, you need fewer lanes (so less road maintenance), and there should be fewer accidents, which means less stress on emergency services.

Putting in a new roundabout vs a new signal is a different story. The roundabout is going to be significantly cheaper since you just need to dump a bit of concrete instead of all of the electronics needed for a signal.

> In the US the car rules

Unfortunately, yes, but roundabouts move more traffic, so they’re even better for a car-centric transit system. If we had better mass transit, we wouldn’t need to worry as much about intersections because there’d be a lot less traffic in general.

If we go with “AI signals,” we’re going to spend millions if not billions on it, because that’s what government contractors do. And I think the benefits would be marginal. It’s better, IMO, to change the driving culture instead of trying to optimize the terrible culture we have.